Product development is all about making tradeoffs.

When designing architecture for data platforms, you will inevitably have to make the tradeoff between implementing schemas that are easy to write (normalized) versus schemas that are easy to read (de-normalized).

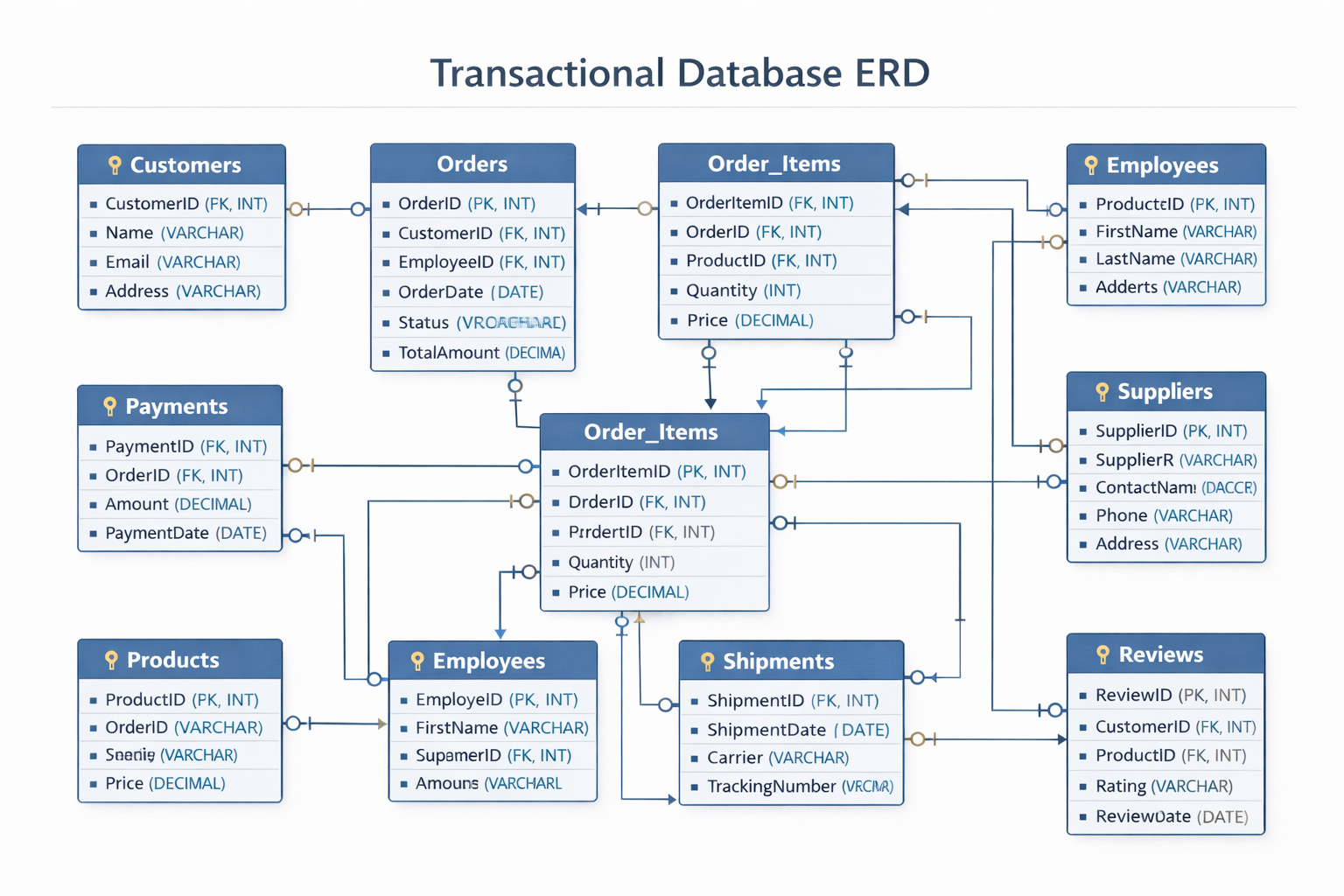

Easy to Write Schema

Easy to write schemas back the web apps you interact with every day. If you can log into a system, you can bet that your state is residing in an easy-to-write database somewhere. As software engineers, we like these designs because we only have to update one or two tables to change the state of something.

In contrast - writing to a read optimized database might involve locking and updating several tables in sequence.

Adding a new customer to the schema above involves adding a record into the customer table, and if they place an order, then we simply add a record to the order table.

So this all seems great, why would you ever want a different design?

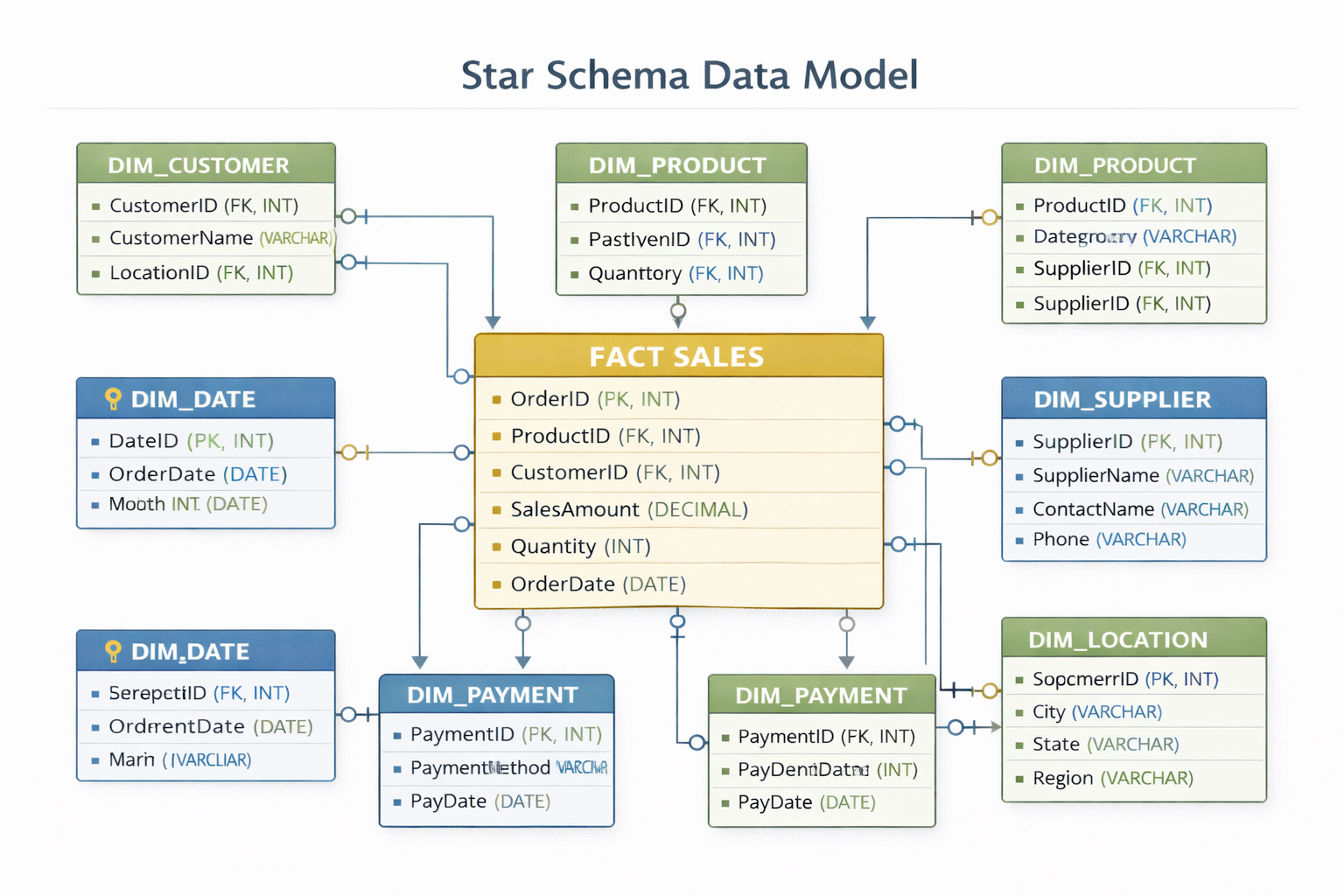

Easy to Read Schema

So now let’s say you're doing a lot of business (congrats!) backed by your pristine 3rd normal form database.

Eventually someone is going to start asking boring "business" questions. How much money did we make selling a subscription for $10 vs when we were selling for $8? We want to incentivize our inactive users with upgraded service before they churn - who are they?

Using a read optimized database we’d just look in a sales fact table -> just query sum(overall $10 sales), sum(overall $8 sales).

In the normalized database we’d have to join together a half dozen tables, probably create some inline views, do some outer joins, and god forbid there are any many to many relationships in the mix. The query would probably be long and complex (error prone), and slow.

So… what do we do?

TLDR - This trade off is so ubiquitous that most organizations choose to implement BOTH styles of schema, with data initially writing to the normalized schema, and then utilize ETL processes to populate the de-normalized schema. (Therefore effectively making no tradeoff and mooting this entire blog post).

The business folks get their questions answered and can continue to generate revenue which pays the folks maintaining multiple databases of the same data.

What this means for Source

The current version of Source only has an “easy to write” database schema - one giant Events table. This helped us to quickly get to a Beta, but it’s already starting to run into very limiting read performance issues.

With our beta users, we consume about 100k pixel events daily. And our product is all about making sense of that noisy, chaotic attribution data. Meaning we are heavy on Read use cases.

So we’ve recently decided to make the no-tradeoff tradeoff and internally launched a new schema backed by standard data warehousing processes and concepts. Every customer gets their own Big Query project including a “raw_data” data lake, a “standardized_data” staging schema, and a “data_mart” datamart/warehouse.

This gives us the ability to process infinitely more customer data, with almost-infinitely better performance, and offer differing tiers of service usage.

PS

There is technically a third option - creating a schema-less database. This option is reserved for people that enjoy the sensation of emotional pain, or people that have almost no data organization requirements (logging systems, “maybe we’ll use it later” databases, etc)